(amgun/Shutterstock)

Google Cloud made a slew of announcements today at its annual user conference, Next, including new processor options for AI training, new GenAI capabilities in Vertex, an AI model for generating videos, new GenAI features in BigQuery and Looker, new AI-powered security functions, and even a new security-focused Web browser called Chrome Enterprise Premium.

Let’s start with hardware, which is really just another service in Google Cloud.

The cloud giant said the latest iteration of its Tensor Processing Unit, TPU v5p, can train large language models 3x faster than the previous iteration. There’s also A3 Mega, a new processing option based on Nvidia H100 GPUs that offers twice the GPU-to-GPU networking bandwidth, which will bolster both LLM training and inference. See a blog post by Mark Lohmeyer, the company’s vice president and general manager of compute and ML infrastructure, to learn more.

Google Cloud also unveiled Axion, its first Google-designed Arm-based CPU, which is built for general-purpose workloads. According to Lohmeyer, Axion provides up to 50% better performance and up to 60% better energy efficiency than comparable x86-based instances, and 30% better performance than the fastest Arm-based instances available in the cloud today. For more info on Axion, read this blog post by Amin Vahdat, the company’s vice president and general manager of Machine Learning, Systems, and Cloud AI.

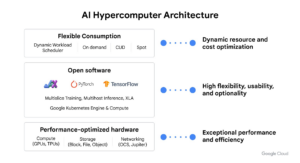

On the storage front, the company added a block storage offering called Hyperdisk ML to its AI Hypercomputer architecture. Designed for inference and currently in preview, Hyperdisk ML will deliver up to 12 times faster model load times than common alternatives, Lohmeyer said. “We’ve also enhanced Parallelstore, our high performance parallel file system with caching capabilities, to keep data closer to the compute, providing 3.9 times faster training times,” Lohmeyer said in a press conference.

Vertex AI, Google Cloud’s AI development and runtime platform, is gaining new capabilities. For starters, there are some new additions to Vertex AI Model Garden, which already sports 130 models. New additions include Gemini 1.5 Pro and Imagen 2.0 from Google, Claude 3 from Anthropic, and a variety of open source models, including Mistral 7B, Mixtral, and Code Gemma. For more info, see this blog post by Vahdat.

The preview of Gemini 1.5 Pro will provide a context window of up to 1 million tokens, “allowing you to process a lot more information with one shot,” Google Cloud CEO Thomas Kurian said in the press conference.

An update to Vertex AI Agent Builder will provide better “grounding,” Kurian said, and enable users “to use Google search…to ground against your enterprise databases, including Google databases.”

The company also introduced a new retrieval augmented generation (RAG) function called vector search “which provides essentially a self-serviced, easy to use, fully managed Retrieval Augmented Generation platform,” Kurian said.

Gemini is being supported in two Google Cloud analytics properties: BigQuery and Looker.

Google Cloud is allowing users to fine-tune Gemini models using data they have stored in the data analytics warehouse, BigQuery. It’s also using Gemini’s GenAI capabilities to help with data preparation, engineering, and analytics tasks within BigQuery. The Goog is also providing “direct integration” between Vertex AI and BigQuery, which it says “enables seamless preparation and analysis of multimodal data such as documents, audio and video files.”

In the data analytics and BI product Looker, Gemini will allow “business users to chat with their enterprise data and generate visualizations and reports–all powered by the Looker semantic data model that’s seamlessly integrated into Google Workspace,” writes the company’s vice president and general manager of data analytics, Gerrit Kazmaier, in a blog post.

Google is also bringing GenAI to database country, namely MySQL and Postgres. The company says its “Gemini in Databases” launch will include three deliverables: providing a SQL code-assist in Database Studio; helping to manage customers’ database “fleets” in Database Center; and helping out in Database Migration Service. Andi Gutmans, the company’s general manager and vice president of database engineering, has more details in his blog.

AlloyDB AI is also getting a bump up in capability when it comes to vectors. According to Gutmans, AlloyDB AI is getting a new pgvector-compatible index based on Google’s approximate nearest neighbor algorithms. “In our performance tests, AlloyDB AI offers up to four times faster vector querying than the popular ‘hnsw’ index in standard PostgreSQL, up to eight times faster index creation, and typically uses 3-4 times less memory than the HNSW index in standard PostgreSQL,” Gutmans writes. The Google-developed vector index is in tech preview on AlloyDB Omni and will be supported on AlloyDB on Google Cloud soon.
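For readers new to vector querying: indexes like HNSW, and the new Google-developed index Gutmans describes, are approximate nearest neighbor (ANN) structures that exist to avoid the brute-force search sketched below. This minimal example is not AlloyDB's or pgvector's implementation; the toy corpus and the function names `cosine_similarity` and `exact_top_k` are hypothetical, chosen only to show the exact search that ANN indexes approximate.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def exact_top_k(query: list[float], corpus: dict[str, list[float]], k: int) -> list[str]:
    """Score every stored vector and return the ids of the k most similar.

    This full scan costs O(n * d) per query; ANN indexes such as HNSW
    skip most of the n vectors, trading a little recall for speed.
    """
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in corpus.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:k]]


# Hypothetical toy corpus of 3-dimensional embeddings.
corpus = {
    "doc_a": [0.9, 0.1, 0.0],
    "doc_b": [0.1, 0.9, 0.0],
    "doc_c": [0.7, 0.7, 0.1],
}
print(exact_top_k([1.0, 0.0, 0.0], corpus, k=2))  # ['doc_a', 'doc_c']
```

The speed, index-build, and memory figures Gutmans cites all concern how efficiently an index avoids this full scan while still returning close to the same top results.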

Over on Google Distributed Cloud (GDC), the company’s hybrid cloud offering that combines cloud and on-prem capabilities, there are several new AI features to talk about, including:

- Support for Nvidia GPUs

- Support for GKE, Google’s distribution of Kubernetes

- Support for open AI models like Gemma and Llama 2

- Support for AlloyDB Omni for Vector Search

- And support for Sovereign Cloud, giving customers concerned with local operations and full survivability a fully “air-gapped” configuration.

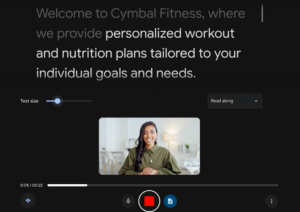

Several new AI capabilities are coming to Google Workspace, the company’s offering to help teams collaborate. One to keep an eye on is the formal launch of Google Vids, an AI-powered video creation app that it teased customers with at Next last June.

“Vids is your video editing, writing, production assistant, all-in-one,” said Aparna Pappu, the vice president and general manager of Google Workspace, at a press conference. “Customers will now be able to create everything from product pitches to training content to celebratory team videos and much more.”

Google has integrated Vertex AI into Workspace, with the idea of making it easier to build AI-powered workflows into the Google offerings that users work in, such as Docs, Gmail, and Sheets. In addition, Google is adding two new AI-powered offerings to Workspace: one for running AI-powered meetings, and another for bolstering security through AI. Both cost $10 per user per month.

Finally, Google is making several announcements around the integration of AI into security. The company has bolstered the initial integration of Gemini into its Security Operations tool with a new assisted investigation feature. It has also adopted Gemini in Threat Intelligence, which will help security and operations professionals make better sense of the morass of security data flowing at them.

“This allows defenders to use conversational search to gain faster insight into threat actor behavior based on Mandiant’s growing repository of threat intelligence,” said Brad Calder, the vice president and general manager of Google Cloud Platform and Technical Infrastructure.

Google is also launching a new security-focused Web browser that will provide “a new frontline of defense for organizations,” the company said. Dubbed Chrome Enterprise Premium, the new offering brings advanced sandboxing, zero-trust access controls, real-time checks of websites, and novel exploit mitigations to protect against zero-day vulnerabilities and other attack vectors.

“We see a transformation in the work environment where the browser has become the place where every high value activity and interaction in the enterprise is happening,” Calder said in the press conference. “The browser is essentially serving as the new endpoint, so this braces the endpoint security of enterprises.”

The opening keynote for Google Cloud Next ’24 starts at 9 a.m. Tuesday, April 9. You can watch it here.
