Building architectural models of the embedded computing resources for cyber-physical systems (CPS) has been shown to be both practical and pragmatic. Nevertheless, the government and Department of Defense (DoD) contractor community has been slow to adopt this practice. We have observed, firsthand, contractor skepticism on the question of whether the increased cost of building these models is justified. In this SEI Blog post, we examine the problem space and advancements in the design and implementation of embedded computing resources for CPS, the complexities of which drive the need for model building. We also examine the use of traditional methods, such as return on investment (ROI), to justify the added expense of building and maintaining these virtual models, the limitations of ROI in this context, and alternative ways to quantify and rationalize the benefits. Finally, we discuss our vision for using model-based methods to reduce integration and test risk, the potential benefits of that change on CPS, and our recommendations for organizations that want to move forward with a model-based approach in the absence of solid ROI data.

Cyber-Physical System Modeling and ROI

As CPS grow more complex, the software embedded within these systems becomes a bigger part of the overall technical solution. In addition, the growing number of physical parameters the system monitors and controls adds to this complexity and can make system behavior hard to predict. Typically, unintended behavior surfaces at the end of product development, during integration and testing. After deployment, the complexity only gets worse, making it harder to predict the impact of incremental updates or modernization efforts.

Despite this growing complexity, CPS development organizations have been slow to adopt a key potential process improvement that could help them address this challenge: the use of virtual architectural models and associated analysis tools. Why? One common reason is the perceived need to prove that a new method, such as this one, is better than the old way of doing things.

In other domains, engineers have employed model-based design and analysis for centuries. For instance, mechanical engineers use finite element models to improve the quality of their designs and to provide an element of verification. They use these modeling tools iteratively in the design process to optimize designs and reduce design risk. Bridges collapsing or rockets exploding on the launch pad produce graphic images of design failure, and preventing such failures is paramount. The need to demonstrate financial ROI is secondary to ensuring public safety or maintaining our national standing, and the public has generally accepted that trade-off.

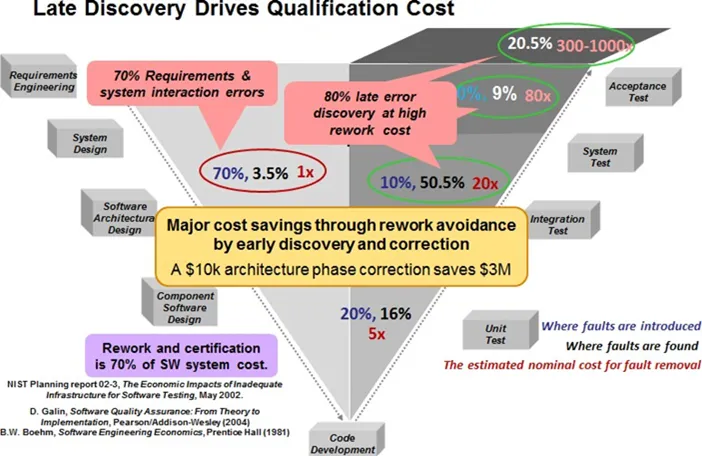

In the case of embedded computing resources for CPS, however, design failures remain invisible until the physical devices are connected. The cost of dealing with them at this stage can be up to 80 times greater than the cost of catching them during design. Models of the CPS, and specifically of its embedded computing resources, can be used during early lifecycle stages to predict these issues (or constraints) and to evaluate alternative designs that might mitigate future problems.
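To make the scale of that multiplier concrete, the following sketch compares relative rework cost for a hypothetical program. The defect counts, the function name, and the 1x design-phase baseline are illustrative assumptions; only the upper-bound 80x ratio comes from the preceding paragraph.

```python
# Illustrative arithmetic only: the defect counts and the 1x baseline are
# hypothetical; 80x is the upper-bound ratio cited in the text.
DESIGN_FIX_COST = 1.0        # relative cost to fix a defect caught during design
INTEGRATION_FIX_COST = 80.0  # "up to 80 times greater" when caught at integration


def rework_cost(total_defects: int, caught_in_design: int) -> float:
    """Relative rework cost when some defects are caught in design and the rest slip to integration."""
    if not 0 <= caught_in_design <= total_defects:
        raise ValueError("caught_in_design must be between 0 and total_defects")
    escaped = total_defects - caught_in_design
    return caught_in_design * DESIGN_FIX_COST + escaped * INTEGRATION_FIX_COST


# Hypothetical program with 100 defects in its computing-resource design.
all_late = rework_cost(100, 0)     # 100 * 80 = 8000.0
half_early = rework_cost(100, 50)  # 50 * 1 + 50 * 80 = 4050.0
print(all_late, half_early)
```

Because 80x is an upper bound, an organization would substitute its own historical cost ratios before drawing conclusions from a calculation like this.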

Cyber-Physical Systems in the DoD

CPS are pervasive in DoD systems. They are often associated with real-time or safety non-functional requirements: providing a function that must be completed under time constraints (e.g., meeting deadlines and periodicity requirements) while ensuring safety invariants (e.g., avoiding unsafe situations that would create an unacceptable risk to the system or its environment). CPS add complexity to the system because of the tight coupling between computations and physical processes.
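As one illustration of the timing analysis such requirements call for, the sketch below applies the classic Liu and Layland utilization bound for rate-monotonic scheduling to a set of periodic tasks. The task names and timing values are hypothetical, and deadlines are assumed equal to periods. The test is sufficient but not necessary: a task set that fails it may still be schedulable and simply needs a more precise analysis, such as response-time analysis.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PeriodicTask:
    name: str
    wcet_ms: float    # worst-case execution time C_i
    period_ms: float  # period T_i; deadline assumed equal to period


def utilization(tasks: list[PeriodicTask]) -> float:
    """Total processor utilization U = sum(C_i / T_i)."""
    return sum(t.wcet_ms / t.period_ms for t in tasks)


def rm_bound(n: int) -> float:
    """Liu and Layland bound n(2^(1/n) - 1) for n tasks under rate-monotonic priorities."""
    return n * (2 ** (1 / n) - 1)


def passes_rm_test(tasks: list[PeriodicTask]) -> bool:
    """Sufficient, not necessary: False means 'analyze further', not 'unschedulable'."""
    if not tasks:
        return True
    return utilization(tasks) <= rm_bound(len(tasks))


# Hypothetical tasks sharing one processor.
tasks = [
    PeriodicTask("attitude_control", wcet_ms=2.0, period_ms=10.0),  # U = 0.20
    PeriodicTask("navigation", wcet_ms=5.0, period_ms=50.0),        # U = 0.10
    PeriodicTask("telemetry", wcet_ms=20.0, period_ms=100.0),       # U = 0.20
]
# prints: U = 0.50, bound = 0.780, passes = True
print(f"U = {utilization(tasks):.2f}, bound = {rm_bound(len(tasks)):.3f}, passes = {passes_rm_test(tasks)}")
```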

Because of this interleaving of physics and computer science concerns, a single state of practice for engineering CPS has yet to emerge. However, understanding the system’s concept of operations (CONOPS) and high-level requirements is key to narrowing down the relevant body of engineering knowledge. For instance, controlling a swarm of unmanned aerial vehicles (UAVs) will rely on control theory, flight dynamics, wireless communication stacks, and distributed algorithms, whereas designing a robot that operates alongside human operators will rely on mechatronics, inverse kinematics, and stringent design methods for real-time safety-critical systems.

Industry has therefore developed standards and techniques to advance CPS engineering, such as simulation to validate a system or a digital twin to monitor a system as it is being deployed. While these approaches support the engineering of CPS, they do not address the diversity of analysis methods required. In response, model-based design and analysis has been suggested as a discipline to address the broad need for performance, safety, security, or behavioral analyses of a system.

Model-Based Design and Analysis

Model-based design, or model-based systems engineering (MBSE), is a key aspect of the DoD’s digital engineering strategy. However, many organizations do not work natively in MBSE tools. They do their work outside the MBSE environment, then document the resulting design in it. To unlock the true potential of MBSE, developers need to build system models and the associated analysis environment, as has been done in other domains, and use the digital environment organically to test design ideas and build quality in.

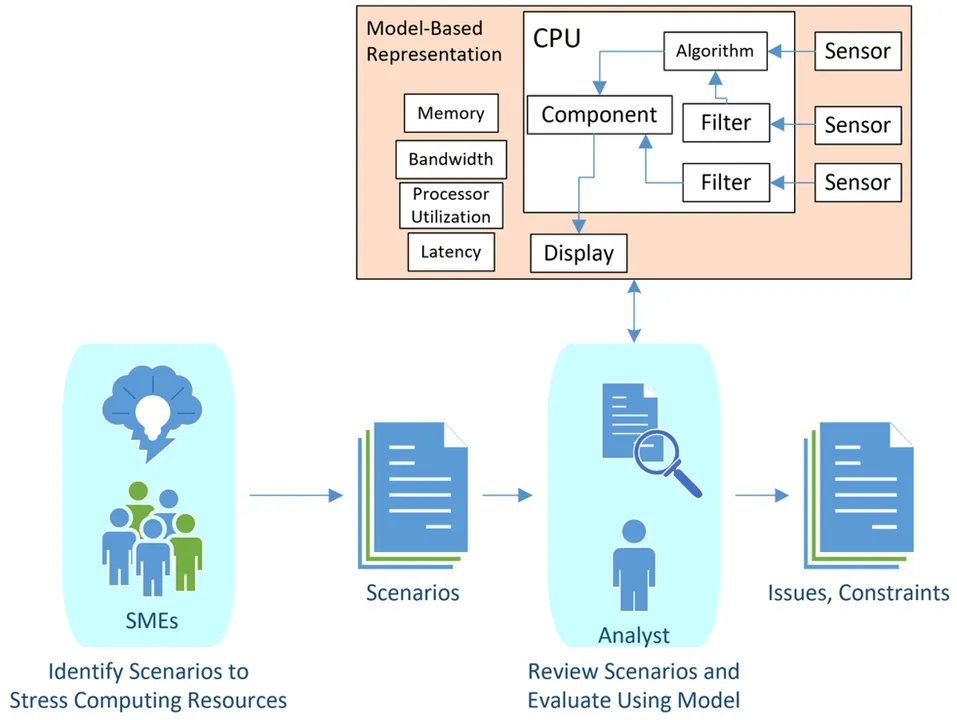

Figure 1 illustrates a mature, model-based design environment. Subject matter experts (SMEs) identify design stressors to uncover weaknesses in the design, then developers build an environment to evaluate designs as they evolve. A simple example is the use of a wind tunnel to assess air drag in the design of a performance car. With an analysis environment, teams can significantly reduce the time needed to create a final design. As they gain experience with the analysis methods and tools and validate them, designers and engineers learn to rely on them for the early performance predictions needed for design verification.

Figure 1: Notional Model-Based Analysis Process

After constraints have been identified, they are managed by the design team. Having an environment to evaluate scenarios that stress constraints is an essential element for predicting product performance. It is often possible to identify unintended consequences of design decisions by using analysis tools and facilities early in the project lifecycle. Figure 2 depicts the impact of late discovery.

Figure 2: The Gap Between Defect Origin and Discovery (Feiler, Goodenough, Gurfinkel, Weinstock, & Wrage, 2013)

An analysis capability provides early insight into product performance, allowing the design team to better manage technical risk. For DoD CPS, the physical aspect of the equipment constrains the overall analysis. The DoD acquisition timeline presents an additional challenge. Developers of non-DoD CPS (e.g., automotive manufacturers) generally release new product models every year, so the previous year’s analytical tooling needs only minor modification to work for the current year’s model. It is also generally the case that last year’s models functioned properly, so constraints are known and planned for.

In contrast, the DoD acquisition timeline and the systems engineering process (methodical and rigorous, but also generally following a waterfall approach) mean that by the time requirements have been allocated to components, it may be too late to make the changes needed to manage an emerging technical constraint. It is therefore important for development teams to have an analysis capability throughout the systems engineering process to inform critical systems engineering decisions.

Managing design and development with analytical tools should provide higher levels of design assurance and fewer issues during integration and test. The Architecture Analysis and Design Language (AADL) is an ideal language for this purpose. AADL provides the foundations for the precise analysis of safety-critical CPS, and the Aerospace Vehicle Systems Institute at Texas A&M has used it in the System Architecture Virtual Integration (SAVI) effort to address the problem of embedded software system affordability. AADL has also been used by the Defense Advanced Research Projects Agency’s (DARPA) High-Assurance Cyber Military Systems (HACMS) program as part of its MBSE toolkit to build embedded computing systems that are resilient against cyberattacks.

The Opportunity for Cyber-Physical Systems

In our experience, discovering issues and constraints only during integration and testing, together with the work required to correct them, is almost certain to cause program delays, cost overruns, and quality concerns. We generally find the following types of issues during integration and testing:

- basic incompatibilities between the components that comprise the system, usually connected through the infrastructure of the system

- unexpected behavior when we connect the components together

- computing resource constraints that limit the system capability, especially when the system is under load

Most DoD contractors we have observed do not use model-based methods to address the root causes of these late-breaking issues. Specifically, they do not use models of computing resources to assess the adequacy of the planned computational, memory, and bandwidth loading. The most common objection we have heard is that the modeling and analysis effort is somehow redundant and not necessarily as effective as traditional methods. Detractors seek conclusive data that demonstrates the ROI, which currently is hard to provide.
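A minimal sketch of that kind of loading assessment is a budget roll-up: sum each component's planned demand for processor throughput, memory, and bandwidth and compare it with the capacity of the target platform. The `Budget` type, the component figures, and the 80 percent margin below are hypothetical assumptions for illustration; a real architecture model would also account for how components are bound to specific processors and buses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Budget:
    """Planned demand (or capacity) for the three resources the text mentions."""
    cpu_mips: float
    memory_mb: float
    bandwidth_mbps: float


def roll_up(budgets: list[Budget]) -> Budget:
    """Sum the demand of every component allocated to the platform."""
    return Budget(
        cpu_mips=sum(b.cpu_mips for b in budgets),
        memory_mb=sum(b.memory_mb for b in budgets),
        bandwidth_mbps=sum(b.bandwidth_mbps for b in budgets),
    )


def overloaded(demand: list[Budget], capacity: Budget, margin: float = 0.8) -> dict[str, float]:
    """Return the load fraction of each resource whose load exceeds the (hypothetical) margin."""
    used = roll_up(demand)
    loads = {
        "cpu": used.cpu_mips / capacity.cpu_mips,
        "memory": used.memory_mb / capacity.memory_mb,
        "bandwidth": used.bandwidth_mbps / capacity.bandwidth_mbps,
    }
    return {name: load for name, load in loads.items() if load > margin}


# Hypothetical components allocated to one processing node.
components = {
    "sensor_fusion": Budget(cpu_mips=400, memory_mb=256, bandwidth_mbps=40),
    "mission_app": Budget(cpu_mips=300, memory_mb=512, bandwidth_mbps=20),
    "comms_gateway": Budget(cpu_mips=100, memory_mb=128, bandwidth_mbps=35),
}
node = Budget(cpu_mips=1000, memory_mb=2048, bandwidth_mbps=100)
print(overloaded(list(components.values()), node))  # {'bandwidth': 0.95}
```

In this hypothetical configuration, the roll-up flags bandwidth at 95 percent of capacity while processor load sits exactly at the margin, the kind of constraint that would otherwise surface only in the SIL.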

We envision a development environment of the future in which integration and testing engineers build a virtual environment to assess the state of development from day 1, refining and elaborating the model(s) as the designs mature but always able to answer fundamental questions about the system performance, safety, security, modularity, or any other relevant quality attribute. Initial models might be primitive and incomplete, but the virtual environment will still provide an early verification and validation (V&V) check on the systems engineering processes: requirements analysis, functional design, and allocation. Systems engineers would then either use the environment themselves or reach out to the integration and test engineers to conduct what-if analyses. The results of the analyses would be documented in the system design.

Alternative Approaches to Using ROI to Evaluate Model-Based Analysis in CPS

ROI measures an organization’s financial justification for an investment (e.g., an investment of X dollars will improve some discrete aspect of the product, such as time to market). The improvement may not produce a direct financial benefit, but the investing organization will nevertheless recognize the improvement as desirable for the business. For example, reducing time to market may enable greater market share.

In the context of DoD CPS, we have observed that systems fail or are constrained unexpectedly when entering integration and testing. An ROI goal for developers of DoD CPS is to mitigate the impact of this seemingly inevitable pattern. They could do so in a couple of ways:

- Identify the constraints earlier to allow for the planning and execution of mitigation strategies.

- Identify and correct defects and/or issues earlier to improve the overall quality of the system, reducing the likelihood that significant defects will appear during integration and testing.

Cost overruns, schedule delays, and technical compromises have a significant negative impact on CPS programs. Even when extra investment is made to finish them, the finished product is often merely good enough rather than what we wanted. Moreover, because the requirements have been paid for, developers must accept that all the requirements as implemented (no matter how poorly) are what we wanted. When future changes are proposed to achieve what we want, the objection is often raised that what you got was good enough, and the taxpayer shouldn’t have to pay twice for the same capability.

Creating an ROI Experiment

Wouldn’t it be nice if a documented study showed how to use the model-based methods to improve your process? Several factors make such a study hard, if not impossible, in the DoD CPS context, including:

- The DoD acquisition lifecycle is quite long. By the time we get to integration and testing, we can’t remember what we found during requirements analysis or other early reviews.

- Teams of developers will not have the same skill sets. Trying to set up an experiment to compare apples with apples would be challenging.

- While conducting the study, we need to acknowledge that the organization would still be learning how to apply the new technology.

- Determining what to measure may vary by organization: Different organizations will characterize benefits in different ways.

Consequently, the ROI benefit will be calculated differently from organization to organization, and possibly from project to project. Is the on-time delivery of capability to the warfighter more valuable than avoiding a $500 million cost overrun and a two-year schedule delay? Model-based methods will support either goal, but the development organization must decide which benefit it values more. Market share, for example, contributes to top-line revenue growth, whereas ROI is a more complicated bottom-line calculation.
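The bottom-line calculation itself is simple; the difficulty lies in its inputs. The sketch below uses the conventional ROI formula, net benefit divided by cost, with avoided rework valued at a labor rate. The helper names, dollar figures, hours, and labor rate are all hypothetical. Note what the formula leaves out: the value of on-time delivery to the warfighter does not appear unless the organization chooses to assign it a number.

```python
def avoided_rework_benefit(hours_avoided: float, labor_rate: float) -> float:
    """Value of integration-and-test rework that did not have to be performed."""
    return hours_avoided * labor_rate


def roi(benefit: float, cost: float) -> float:
    """Conventional ROI: (benefit - cost) / cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (benefit - cost) / cost


# Hypothetical inputs: $1.2M spent on modeling, 20,000 rework hours avoided at $150/hour.
modeling_cost = 1_200_000.0
benefit = avoided_rework_benefit(hours_avoided=20_000, labor_rate=150.0)  # 3,000,000.0
print(f"ROI = {roi(benefit, modeling_cost):.2f}")  # ROI = 1.50
```

The `hours_avoided` input is itself a counterfactual estimate, which is why an ROI figure like this one is hard to defend to a skeptic.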

Organizations must develop objective criteria each time they apply the model-based methods. For example, the main benefit of adopting model-based methods should be less rework required during integration and testing. How will organizations measure this—effort, schedule, number of issues found, or some combination? The following sections examine this question.

How Can You Count Defects that Aren’t There?

In general, applying models and analysis methods earlier in the lifecycle leads to fewer issues later, so fewer defects should be identified during integration and testing. The challenge, however, is determining whether the relative absence of defects is attributable to the model-based methods. Psychology uses the term counterfactual to describe ruminations on what our lives might have been if we had followed a different path from the one we chose. In our context, the counterfactual concerns the number of defects, issues, and constraints we would find at system integration and system testing had we taken a different path.

For example, organizations may want to determine how many issues they might have caught if only they had used a model-based approach from the beginning. Because we find the same types of issues every time we build a CPS, we may accept them as normal, and it is hard to ask the if-only question about something that is not exceptional. Using counterfactual thinking, we would envision a scenario in which we had employed model-based methods as an integral part of the design process and use the projected outcomes to justify the investment.

Post-Mortem Analysis

An organization might also justify a model-based approach by using prior project data to illustrate what could have been if only it had applied model-based methods. By itself, this method only identifies opportunities. The organization would then need to figure out how to incorporate model-based analysis into its process in a way that identifies issues earlier in the lifecycle. This method is useful for identifying process improvement opportunities.

A post-mortem analysis generally employs the following process:

- Identify a set of projects to review.

- Examine the defect database and perform a Pareto analysis of the defects by the time required to correct each issue. A Pareto analysis categorizes and ranks defects on the principle that a small percentage of causes yields a large percentage of the effects, so the most significant issues can be prioritized.

- For each of the defects in the top 80 percent, determine how a model-based method could have been employed to prevent the issue from occurring (along with an assessment of how practical it would have been to have done this).

- Summarize the effort that would have been saved, realistically, by using a model-based method, and use this summary to motivate the potential benefit for the investment.
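The Pareto and summary steps above can be sketched in a few lines. This is a hypothetical illustration: the `Defect` record, the per-defect `practicality` estimate (the reviewer's judgment, between 0 and 1, of how practical model-based prevention would have been), and the sample data are assumptions, not a prescribed format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Defect:
    defect_id: str
    hours_to_fix: float
    practicality: float  # hypothetical 0-1 reviewer estimate that a model-based method could have prevented it


def pareto_top(defects: list[Defect], share: float = 0.8) -> list[Defect]:
    """Largest defects first, stopping once they account for `share` of total correction effort."""
    ranked = sorted(defects, key=lambda d: d.hours_to_fix, reverse=True)
    total = sum(d.hours_to_fix for d in ranked)
    selected: list[Defect] = []
    running = 0.0
    for d in ranked:
        if running >= share * total:
            break
        selected.append(d)
        running += d.hours_to_fix
    return selected


def realistic_savings(top: list[Defect]) -> float:
    """Correction effort that model-based methods might realistically have saved."""
    return sum(d.hours_to_fix * d.practicality for d in top)


defects = [
    Defect("D1", 400, 0.75),
    Defect("D2", 250, 0.0),
    Defect("D3", 150, 0.5),
    Defect("D4", 100, 1.0),
    Defect("D5", 60, 0.0),
    Defect("D6", 30, 0.5),
]
top = pareto_top(defects)
print([d.defect_id for d in top], realistic_savings(top))  # ['D1', 'D2', 'D3'] 375.0
```

With these sample records, three defects cover just over 80 percent of the 990 correction hours, and the discounted estimate of avoidable effort is 375 hours.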

Using this approach, a root cause analysis would assess where the issue could have been identified had model-based methods been used. Many organizations already follow a similar practice: a defect found late in the lifecycle is characterized as an escape, and escape data is used to improve the quality of design reviews. Model-based methods enhance the ability to critically review the system and component designs as they evolve, so an escaped issue could be considered a failure of the model-based review.
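Escape data of this kind can be tallied by comparing the phase in which a root cause analysis says an issue could have been caught with the phase in which it was actually found, which is the gap Figure 2 depicts. The sketch below counts escapes per phase and computes a simple phase containment ratio; the phase names, the `Record` shape, and the sample records are hypothetical.

```python
from collections import Counter

# Hypothetical lifecycle phases, in order.
PHASES = ["requirements", "architecture", "design", "code", "integration", "test"]

# Each record: (phase where a model-based review could have caught the issue, phase where it was found).
Record = tuple[str, str]


def is_escape(catchable_in: str, found_in: str) -> bool:
    """An issue escapes when it is found later than the phase that could have caught it."""
    return PHASES.index(found_in) > PHASES.index(catchable_in)


def escapes_by_phase(records: list[Record]) -> Counter:
    """Count escapes against the phase whose review missed them."""
    return Counter(c for c, f in records if is_escape(c, f))


def containment(records: list[Record], phase: str) -> float:
    """Fraction of issues catchable in `phase` that were actually found there."""
    found = [f for c, f in records if c == phase]
    if not found:
        raise ValueError(f"no issues attributed to phase {phase!r}")
    return sum(f == phase for f in found) / len(found)


records = [
    ("requirements", "integration"),
    ("requirements", "requirements"),
    ("requirements", "test"),
    ("architecture", "architecture"),
    ("architecture", "test"),
    ("design", "integration"),
]
print(escapes_by_phase(records))
print(containment(records, "requirements"))
```

A low containment ratio for a given phase points to where the model-based review most needs strengthening.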

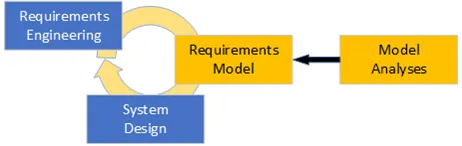

This process could be applied at the end of a project, or it could be done iteratively and recursively as the work progresses. Figure 3 shows how a model could be used to iterate on different technical solutions as requirements are elaborated and a system design emerges. Analyses could be applied to the model as it evolves to predict system characteristics, such as performance, safety, and security.

Figure 3: Feedback Loop Incorporating Model-Based Methods

In the context of Figure 3, there would be similar use of model-based methods at the next level of the design (i.e., system and/or software architecture). A mature development practice would perform root cause analysis of any issues found downstream with the goal of understanding whether this issue could have been found in the prior step (i.e., requirements analysis).

Acceptance by Analogy

Yet another way to rationalize the decision to adopt model-based analysis methods for embedded CPS software systems is to examine the experiences of similar applications of these methods in other domains. As noted earlier, the introduction of model-based methods in fields such as mechanics, thermodynamics, electromagnetic spectrum, electrical engineering, logistics, maintenance, process optimization, and manufacturing has had a transformative effect on the way we do business. This approach accepts that the underlying benefit from applying a model-based approach will occur in embedded computing resources for CPS just as it has in the other domains.

Recommendations for Acquirers

When establishing a new discipline within an acquisition organization, it’s necessary to focus not just on the specific practices that should be established but also on the care and feeding of those practices. Model-based analysis for embedded computing systems is no different. Acquisition programs reside within agencies or DoD program executive office (PEO) structures, and the practice needs support both at the program level and at the higher-echelon level:

- Continue to set expectations with contractors that model-based design and analysis will be required for current and future acquisitions. Use this as a driver to spur investment in model-based methods.

- Train staff on how to use the tooling to be able to effectively review, verify, and validate contractor model-based deliverables.

- Build an enterprise-level competency for model-based methods to establish consistency across programs, and collect lessons learned for future process enhancement.

- Build the supporting infrastructure (digital engineering environment) to provide the capability to collect and analyze contractor deliverables.

Recommendations for Contractors

Winning the hearts and minds of all practitioners, from managers to engineers, will be extremely challenging. In particular, the effort required to build a predictive architectural virtual integration model early in the lifecycle will likely be viewed by management as an unnecessary expense, because the model-based methods in question have not been justified with ROI, and the shifting left of effort means less effort will be available when the real hardware and software show up in the systems integration laboratory (SIL).

After the culture has been established, and the team has accepted that model-based methods will improve the likelihood of success, the team will need to determine how to apply the methods to improve the existing development process. This includes establishing a root cause analysis practice for defect escapes found downstream, so that the findings improve the model-based processes. We recommend the following steps:

- Establish a culture to enable the model-based methods to thrive and add value.

- Establish how the model-based methods are to be implemented.

- Train staff on how to use the tools to perform the new practices.

- Develop a strategy for model management when working with heterogeneous teams of contractors. Don’t assume that it’s my way or the highway.

- Take a critical look at the defect resolution process. Examine the criteria for when root cause analyses are performed. Use the results of the root cause analyses to spur innovation with the model-based development methods.

- Establish a project post-mortem process.

- Establish a plan for how to account for the added costs and measure the value received from applying model-based methods to the existing process.

Better Design, Better Cyber-Physical Systems

We do not live in a perfect world, but we do trust our teams and their processes to produce high-quality designs. When we improve our design process, it is usually because we have identified new methods that enhance our understanding of the problem space. Who would argue that properly using models and analytical methods to provide higher-fidelity design verification could possibly be more expensive than not doing so? Yes, the work to create, verify, validate, and apply the models will cost more, but when problems and computing-resource constraints are found early in the development process, organizations can avoid more expensive rework and possibly provide enhanced capability for new implementations.

Obtaining these benefits is why we advocate that projects build virtual architectural models early in the system development lifecycle of the CPS they are developing. Although this approach will indeed lead to higher initial development costs, ROI is not a useful way to assess the value of adopting MBSE practices for CPS. Post-mortem analysis, analogy, or a simple leap of faith based on a review of MBSE practices in other fields offers a better basis for evaluation.

In our vision of the development environment of the future, early architectural models of the CPS, coupled with model-based analysis methods, will be applied iteratively and recursively from requirements analysis to product design, virtual integration, and testing. As design decisions are made, the fidelity of the architectural model will increase, enabling more accurate estimates of computing resource performance. Eventually, physical hardware and software in a laboratory environment (i.e., a SIL) will take over the practical role of the model, but the architectural models will be kept up to date as issues are found and resolved. After the CPS is completed, the models will be maintained and used to assess the impact of potential changes, possibly as part of a system upgrade.