Having the right analytics backend for your real-time application makes all the difference when it comes to how much time your team spends managing and maintaining the underlying infrastructure.

Today, distributed systems that used to require a lot of manual intervention can often be replaced by more operationally efficient solutions. One example of this evolution is the move from Elasticsearch—which has been a great open-source, full-text search and analytics engine—to a low-ops alternative in Rockset.

Both Rockset and Elasticsearch are queryable datastores that allow users to access and index data easily. Both systems are document-sharded, which allows developers to easily scale horizontally. Both rely on indexing as a means to accelerate queries. But this is where the similarities between Elasticsearch and Rockset end.

Although Elasticsearch has been very popular for the last decade, it has limitations, especially when it comes to managing real-time analytics. It’s memory intensive and more difficult to maintain than newer options like Rockset.

This article will be the first of a three-part series. Throughout these articles we will compare Rockset to Elasticsearch and explain how users can adopt Rockset where Elasticsearch doesn’t perform optimally.

In this particular article we will discuss the benefits Rockset offers developers from an operational perspective. We will look at the various ways Rockset’s design makes it easier to manage compared to Elasticsearch, as well as how it was designed to be more performant.

Real-Time Analytics Use Cases

Before going into the differences between Elasticsearch and Rockset, we’ll discuss some of the use cases that Rockset best serves.

First, Elasticsearch still plays an important role in use cases like text search and log analytics. However, Rockset is better suited to complex real-time search and analytics involving business data.

For example, Rockset partners with customers building logistics management applications, real-time personalization, anomaly detection applications, and real-time customer 360 dashboards. Each of these applications requires a real-time component and often a business logic component. This creates a need for more complex indexes, as well as the ability to write intricate logic. Rockset makes both easy to implement, and both would be more difficult to achieve in Elasticsearch.

Within this scope of use cases, we will focus specifically on the operational benefits Rockset can provide your development team.

Benefits of Rockset for Ops

Rockset’s design provides many benefits for developers who are looking for a real-time indexing database. As previously mentioned, Elasticsearch requires a lot of manual intervention. This means that in order to manage increasing volumes of requests and data, developers need to intervene to scale the Elasticsearch cluster.

In comparison, Rockset is a serverless database, meaning that there is no need for your developers to spend their time tinkering with clusters and infrastructure. This leads to a lower operational burden when developing on your real-time database.

This is not the only area where Rockset provides a low-ops benefit to your development teams. Rockset also helps manage your indexes and data shards automatically.

Many of the benefits Rockset offers come from a cloud-native architecture approach. Elasticsearch doesn’t have this benefit, as it was created in 2010—during the data center era, before infrastructure was as cloud-focused as it is today. As a result, Elasticsearch wasn’t able to take advantage of many of the operational benefits of cloud that Rockset has.

Decoupling Compute and Storage

Many of Rockset’s operational benefits are tied to its design. Rockset has taken advantage of decoupling compute and storage to improve performance.

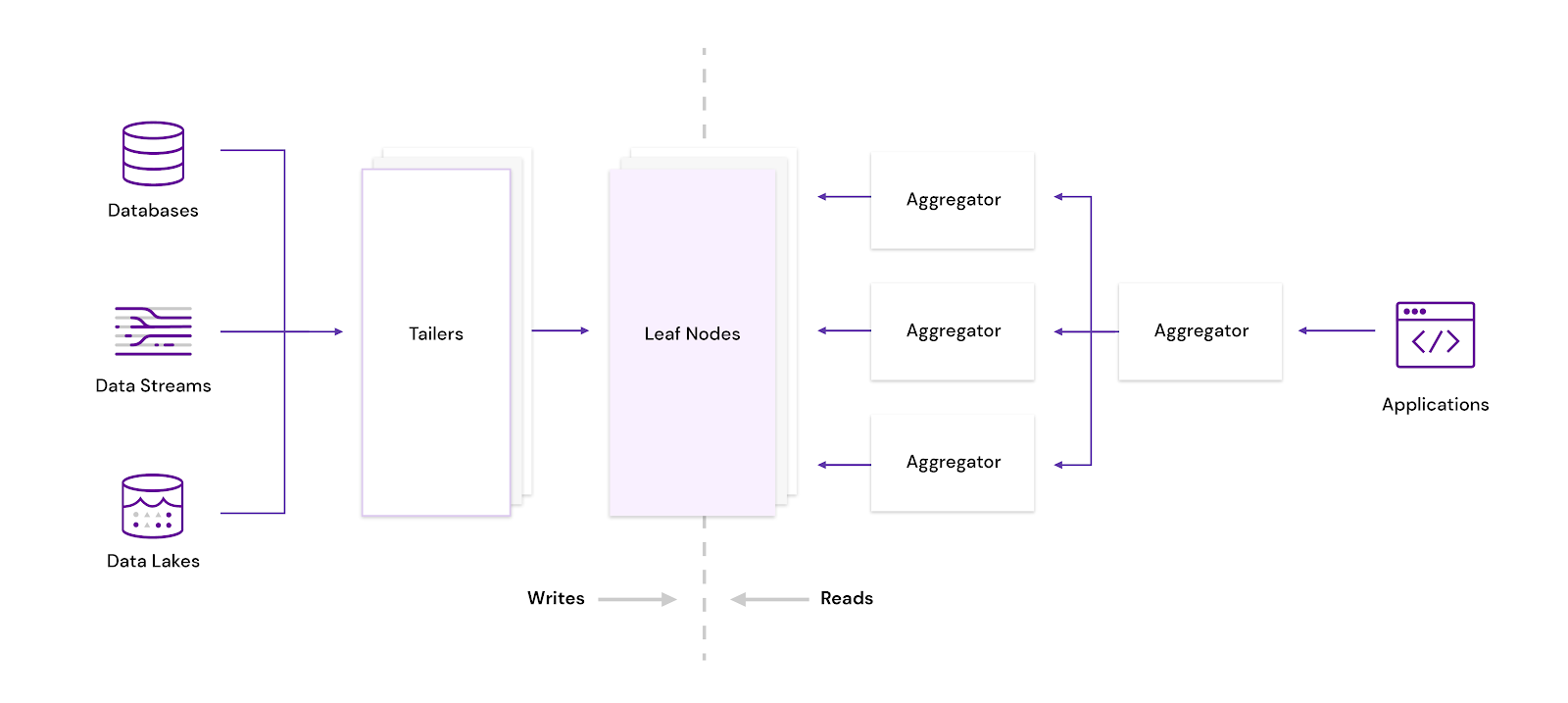

Figure 1: Rockset’s cloud-native architecture, decoupling ingest compute (Tailers), storage (Leaf Nodes), and query compute (Aggregators)

Decoupling compute and storage is a technique used by many modern software architectures. The Snowflake data warehouse, for example, uses a similar concept. For Rockset, it means that storage and compute can scale separately, taking full advantage of cloud elasticity. In contrast, Elasticsearch follows the pattern of more traditional big data systems like Hadoop and shared-nothing MPP systems, which tie storage and compute together and scale in fixed storage-to-compute ratios.

What does Rockset’s storage-compute separation mean in practice? Instead of being forced to scale both compute and storage together, which isn’t efficient, Rockset offers its users the ability to scale the amount of compute to support more query volume or complexity, or scale the amount of storage to handle greater data volume as needed.

This doesn’t just lead to performance improvements; this also allows Rockset users to fine-tune costs. Developers can precisely control the level of compute their workload requires and change it over time to better manage price-performance. In addition, there is no need to grapple with the perennial problem of poor hardware utilization that arises from provisioning for peak usage and scaling in fixed storage-compute ratios.
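As a rough illustration of the utilization point, the sketch below compares how much capacity has to be provisioned when storage and compute are bundled per node versus scaled independently. The workload numbers, the per-node capacities, and the notion of a "query unit" are all hypothetical and chosen only to make the arithmetic visible; they do not describe real Rockset or Elasticsearch sizing.

```python
import math

def coupled_nodes(storage_tb, query_units, tb_per_node, qu_per_node):
    """Nodes needed when every node bundles a fixed amount of storage and compute."""
    return max(math.ceil(storage_tb / tb_per_node),
               math.ceil(query_units / qu_per_node))

def decoupled_units(storage_tb, query_units, tb_per_unit, qu_per_unit):
    """Storage and compute units needed when each tier scales on its own."""
    return (math.ceil(storage_tb / tb_per_unit),
            math.ceil(query_units / qu_per_unit))

# Hypothetical workload: modest data volume (4 TB), heavy query load (40 units).
nodes = coupled_nodes(storage_tb=4, query_units=40, tb_per_node=2, qu_per_node=4)
storage_units, compute_units = decoupled_units(4, 40, 2, 4)

# Storage that is paid for but unused in the coupled layout.
idle_storage_tb = nodes * 2 - 4

print(nodes, storage_units, compute_units)  # 10 2 10
print(idle_storage_tb)                      # 16
```

In this made-up case the query load forces ten bundled nodes, so 16 TB of attached storage sits idle, while the decoupled layout pays for two storage units and ten compute units.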

By using hardware more efficiently and removing the need for capacity planning and manual optimization of infrastructure costs, Rockset offers a 50% lower total cost of ownership (TCO) than Elasticsearch environments.

Separation of Durability and Performance

Another operational advantage of Rockset is that it leverages the cloud’s shared-storage abilities to the fullest by separating durability from performance.

Storing all of the data in cloud storage (S3, GCS, etc.) makes it more durable. This also allows Rockset to limit how often it creates replicas. Rockset only needs to create a single replica on an SSD-based system to serve data in a performant manner when the query or update volume of an index increases.

In contrast, Elasticsearch uses a shared-nothing storage architecture which relies on replication to guarantee data durability. Two or three replicas of Elasticsearch data are typically used for durability and availability even if the query volume is not high. Configuring and managing replication in an Elasticsearch cluster is operational overhead that can be avoided when using Rockset instead.
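To make the difference concrete, here is a small worked calculation of provisioned capacity under the two approaches. The dataset size, the copy count, and the function names are assumptions for illustration only, not measured figures from either system.

```python
def replicated_ssd_tb(data_tb, copies):
    """SSD capacity when every copy, kept for durability, lives on cluster nodes."""
    return data_tb * copies

def shared_storage_tb(data_tb, serving_replicas=1):
    """Capacity when cloud storage holds the durable copy and SSDs only serve queries."""
    return {"cloud_storage_tb": data_tb, "ssd_tb": data_tb * serving_replicas}

# Hypothetical 10 TB dataset.
print(replicated_ssd_tb(10, copies=3))  # 30
print(shared_storage_tb(10))            # {'cloud_storage_tb': 10, 'ssd_tb': 10}
```

Under these assumptions, durability through replication needs three times the node-attached capacity, whereas the shared-storage layout keeps one serving copy on SSD and adds more only when load requires it.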

Automatic Sharding, No Reindexing

Elasticsearch requires heavy intervention when it comes to indexing data. Because Elasticsearch is designed on a document-shard architecture, the number of shards determines the maximum number of nodes on which the dataset can be hosted.

The major issue arises when the index needs to grow. If the underlying dataset and its shards are already distributed across all of the underlying nodes, the queries you are running will slow down.

This slowdown will get worse until developers have no option but to create a new index. This can lead to increased costs and require manual intervention from developers who need to kick off the non-trivial task of re-indexing.
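For readers who have not gone through this, the sketch below builds the two REST requests typically involved: creating a new index with a larger `number_of_shards` (a static setting fixed at index creation) and copying documents across with the `_reindex` API. It only constructs the request payloads and does not contact a cluster. The index names and shard counts are hypothetical.

```python
import json

def create_index_request(index, shards, replicas):
    """Build the request that creates an index with a fixed primary shard count."""
    body = {"settings": {"index": {"number_of_shards": shards,
                                   "number_of_replicas": replicas}}}
    return ("PUT", f"/{index}", body)

def reindex_request(source, dest):
    """Build the request that copies every document from one index to another."""
    body = {"source": {"index": source}, "dest": {"index": dest}}
    return ("POST", "/_reindex", body)

def grow_index_plan(old_index, new_index, new_shards, replicas):
    """Ordered requests for moving a dataset to an index with more shards."""
    return [create_index_request(new_index, new_shards, replicas),
            reindex_request(old_index, new_index)]

# Hypothetical index names and sizes.
plan = grow_index_plan("orders-v1", "orders-v2", new_shards=12, replicas=1)
for method, path, body in plan:
    print(method, path, json.dumps(body))
```

In practice the plan also involves repointing aliases or clients to the new index and deleting the old one once the copy completes, and the reindex itself can run for a long time on large datasets.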

Compare this to Rockset. Indexes on Rockset are designed to scale easily up to hundreds of terabytes without any need to reindex a dataset.

A Rockset index uses microshards. Thousands of microshards are combined to create the optimal number of shards based on the number of servers available and the total size of the index. As datasets increase in size, Rockset will redistribute the microshards as needed, as well as automatically spread the shards to the new machines without manual intervention.
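The general idea can be sketched in a few lines. This is not Rockset's actual placement algorithm, which the article does not describe. It is a minimal greedy rebalancer, with hypothetical server names and microshard counts, showing that when the unit of placement is small, adding servers means moving only a fraction of the units rather than rebuilding the index.

```python
def assign(num_microshards, servers):
    """Spread microshards across servers round-robin."""
    return {m: servers[m % len(servers)] for m in range(num_microshards)}

def rebalance(assignment, servers):
    """Move microshards from the busiest to the idlest server until loads differ by at most 1.

    Assumes every server already named in `assignment` is also in `servers`.
    Returns the new assignment and the number of microshards moved.
    """
    placement = {s: [] for s in servers}
    for shard, server in assignment.items():
        placement[server].append(shard)
    moved = 0
    while True:
        busiest = max(servers, key=lambda s: len(placement[s]))
        idlest = min(servers, key=lambda s: len(placement[s]))
        if len(placement[busiest]) - len(placement[idlest]) <= 1:
            break
        placement[idlest].append(placement[busiest].pop())
        moved += 1
    new_assignment = {shard: server
                      for server, shards in placement.items()
                      for shard in shards}
    return new_assignment, moved

# Hypothetical cluster: 1024 microshards on 4 servers, then 2 servers are added.
old_servers = ["s0", "s1", "s2", "s3"]
new_servers = old_servers + ["s4", "s5"]
before = assign(1024, old_servers)
after, moved = rebalance(before, new_servers)
print(moved, "of", len(before), "microshards moved")
```

In this example about a third of the microshards move to the new machines, and the rest stay where they are. No document is re-indexed, only relocated.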

The Operational Benefits of Serverless

Deploying Elasticsearch is not an easy task. It requires a lot of configuration and knowledge of the software. For example, Elasticsearch requires developers to configure master nodes, data nodes, ingest nodes, coordinating nodes, and alerting nodes.

Each of these nodes plays a different role and requires specific configuration to optimize your team’s Elasticsearch clusters. Overall, the management of these clusters and nodes, along with controlling cost with hot-warm-cold nodes, can become an operational burden.

In contrast, Rockset’s serverless architecture removes all of the operational cost related to managing infrastructure and tuning performance. Rockset seamlessly autoscales storage and compute resources in the cloud, so developers don’t have to take on the responsibility of cluster scaling. There is no need to spend time capacity planning and understanding the intricacies of sharding, replication, and indexing. Thanks to automated management of clusters, shards, indexes, and data retention based on policies set by the user, developers simply connect their data sources to Rockset and run high-performance queries out of the box.

Given the low-ops option that Rockset provides, software development teams can avoid the challenges of managing Elasticsearch. Instead, they can rely on Rockset to provide a serverless database that automatically scales and doesn’t require all of the manual fine-tuning that Elasticsearch does.

Overall, Rockset’s modern cloud-based architecture provides multiple operational benefits that make it a prudent choice when you need to serve low-latency queries to power your app.

Elasticsearch Is No Longer Your Only Option

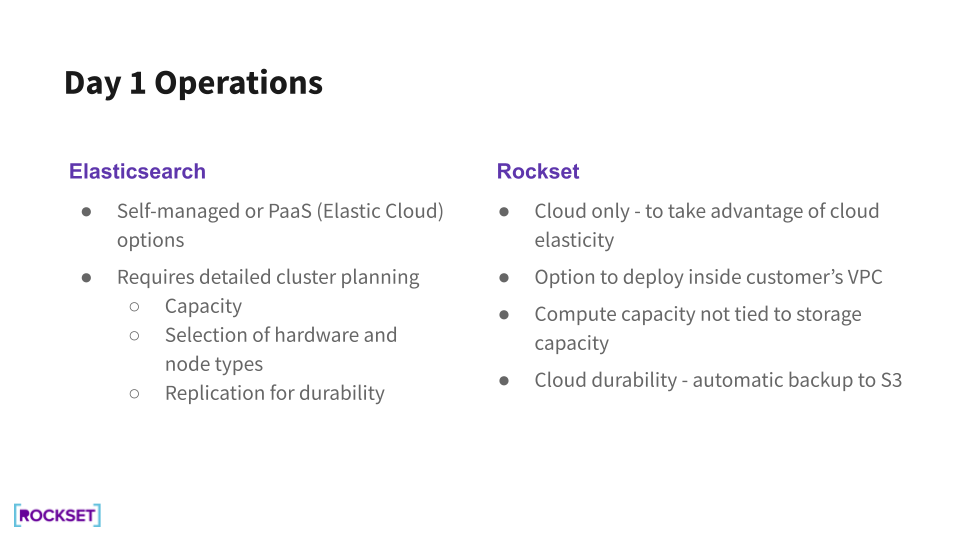

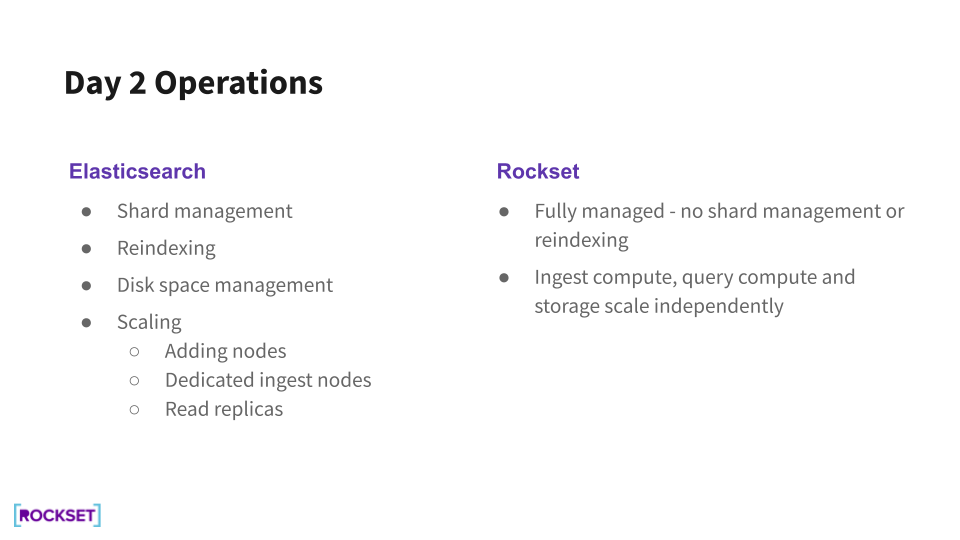

Elasticsearch continues to be an important tool for text search and log analytics. However, Rockset is a low-ops alternative for many search applications, combining serverless architecture with automated indexing, sharding, and scaling. These features provide multiple advantages to the developers managing the operational side of applications. Many of these advantages across Day 1 and Day 2 operations are highlighted in the following summary:

All in all, Rockset is a great fit for enterprises looking to quickly implement new features as well as start-ups looking to develop their modern applications.

Explore more of the architectural differences in the Elasticsearch vs Rockset white paper, and learn about migrating to Rockset in the 5 Steps to Migrate from Elasticsearch to Rockset blog.

Other blogs in this Elasticsearch or Rockset for Real-Time Analytics series: