[ad_1]

Systems such as Gmail, YouTube and Google Play rely on text classification models to identify harmful content including phishing attacks, inappropriate comments, and scams. These types of texts are harder for machine learning models to classify because bad actors rely on adversarial text manipulations to actively attempt to evade the classifiers. For example, they will use homoglyphs, invisible characters, and keyword stuffing to bypass defenses.

To help make text classifiers more robust and efficient, we’ve developed a novel, multilingual text vectorizer called RETVec (Resilient & Efficient Text Vectorizer) that helps models achieve state-of-the-art classification performance and drastically reduces computational cost. Today, we’re sharing how RETVec has been used to help protect Gmail inboxes.

Strengthening the Gmail Spam Classifier with RETVec

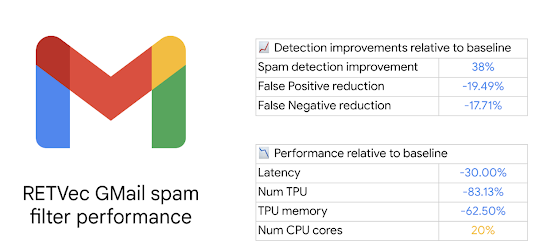

Figure 1. RETVec-based Gmail Spam filter improvements.

Over the past year, we battle-tested RETVec extensively inside Google to evaluate its usefulness and found it to be highly effective for security and anti-abuse applications. In particular, replacing the Gmail spam classifier’s previous text vectorizer with RETVec allowed us to improve the spam detection rate over the baseline by 38% and reduce the false positive rate by 19.4%. Additionally, using RETVec reduced the TPU usage of the model by 83%, making the RETVec deployment one of the largest defense upgrades in recent years. RETVec achieves these improvements by sporting a very lightweight word embedding model (~200k parameters), allowing us to reduce the Transformer model’s size at equal or better performance, and having the ability to split the computation between the host and TPU in a network and memory efficient manner.

RETVec Benefits

RETVec achieves these improvements by combining a novel, highly-compact character encoder, an augmentation-driven training regime, and the use of metric learning. The architecture details and benchmark evaluations are available in our NeurIPS 2023 paper and we open-source RETVec on Github.

Due to its novel architecture, RETVec works out-of-the-box on every language and all UTF-8 characters without the need for text preprocessing, making it the ideal candidate for on-device, web, and large-scale text classification deployments. Models trained with RETVec exhibit faster inference speed due to its compact representation. Having smaller models reduces computational costs and decreases latency, which is critical for large-scale applications and on-device models.

Figure 1. RETVec architecture diagram.

Models trained with RETVec can be seamlessly converted to TFLite for mobile and edge devices, as a result of a native implementation in TensorFlow Text. For web application model deployment, we provide a TensorflowJS layer implementation that is available on Github and you can check out a demo web page running a RETVec-based model.

Figure 2. Typo resilience of text classification models trained from scratch using different vectorizers.

RETVec is a novel open-source text vectorizer that allows you to build more resilient and efficient server-side and on-device text classifiers. The Gmail spam filter uses it to help protect Gmail inboxes against malicious emails.

If you would like to use RETVec for your own use cases or research, we created a tutorial to help you get started.

This research was conducted by Elie Bursztein, Marina Zhang, Owen Vallis, Xinyu Jia, and Alexey Kurakin. We would like to thank Gengxin Miao, Brunno Attorre, Venkat Sreepati, Lidor Avigad, Dan Givol, Rishabh Seth and Melvin Montenegro and all the Googlers who contributed to the project.

[ad_2]