A Brief History of Distributed Databases

The era of Web 2.0 brought with it a renewed interest in database design. Traditional relational databases (RDBMS) served the data storage and data processing needs of the enterprise world well from their commercial inception in the late 1970s until the dotcom era, but the large amounts of data processed by the new applications, and the speed at which this data needs to be processed, required a new approach. For a great overview of the need for these new database designs, I highly recommend watching the presentation Stanford Seminar – Big Data is (at least) Four Different Problems, which database guru Michael Stonebraker delivered for Stanford’s Computer Systems Colloquium. The new databases that emerged during this time adopted names such as NoSQL and NewSQL, emphasizing that good old SQL databases fell short when it came to meeting the new demands.

Despite their different design choices for particular protocols, these databases have adopted, for the most part, a shared-nothing, distributed computing architecture. While the processing power of every computing system is ultimately limited by physical constraints and, in cases such as distributed databases where parallel executions are involved, by the implications of Amdahl’s law, most of these systems offer the theoretical possibility of unlimited horizontal capacity scaling for both compute and storage. Each node represents a unit of compute and storage that can be added to the system as needed.
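To make the Amdahl’s law caveat concrete, here is a minimal sketch of the formula. The function name and the 95% parallel fraction are assumptions chosen for illustration, not measurements from any of the databases discussed here.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Ideal speedup when `parallel_fraction` of the work is spread over `workers`.

    The remaining (1 - parallel_fraction) of the work stays serial.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


if __name__ == "__main__":
    # Hypothetical workload: 95% of the work parallelizes across nodes.
    for workers in (1, 10, 100, 1000):
        print(workers, round(amdahl_speedup(0.95, workers), 2))
```

With 5% of the work remaining serial, the speedup can never exceed 1 / 0.05 = 20, no matter how many nodes are added. This is why horizontal scaling is unlimited in theory but bounded in practice by whatever part of the workload cannot be parallelized.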

However, as Cockroach Labs CEO and co-founder Spencer Kimball explains for CockroachDB in the video The Architecture of a Modern Database: CockroachDB Beta, designing one of these new databases from scratch is a herculean task that requires highly knowledgeable and skillful engineers working in coordination and making carefully considered decisions. For databases such as CockroachDB, having a reliable, high-performance way to store and retrieve data from stable storage is essential. Designing a library that provides fast stable storage on top of either a filesystem or raw devices is a very difficult problem because of the large number of edge cases that must be handled correctly.

Providing Fast Storage with RocksDB

RocksDB is a library that solves the problem of abstracting access to local stable storage. It allows software engineers to focus their energies on the design and implementation of other areas of their systems, with the peace of mind that comes from relying on RocksDB for access to stable storage. RocksDB currently runs some of the most demanding database workloads anywhere on the planet, at Facebook and in other equally challenging environments.

The advantages of RocksDB over other storage engines are:

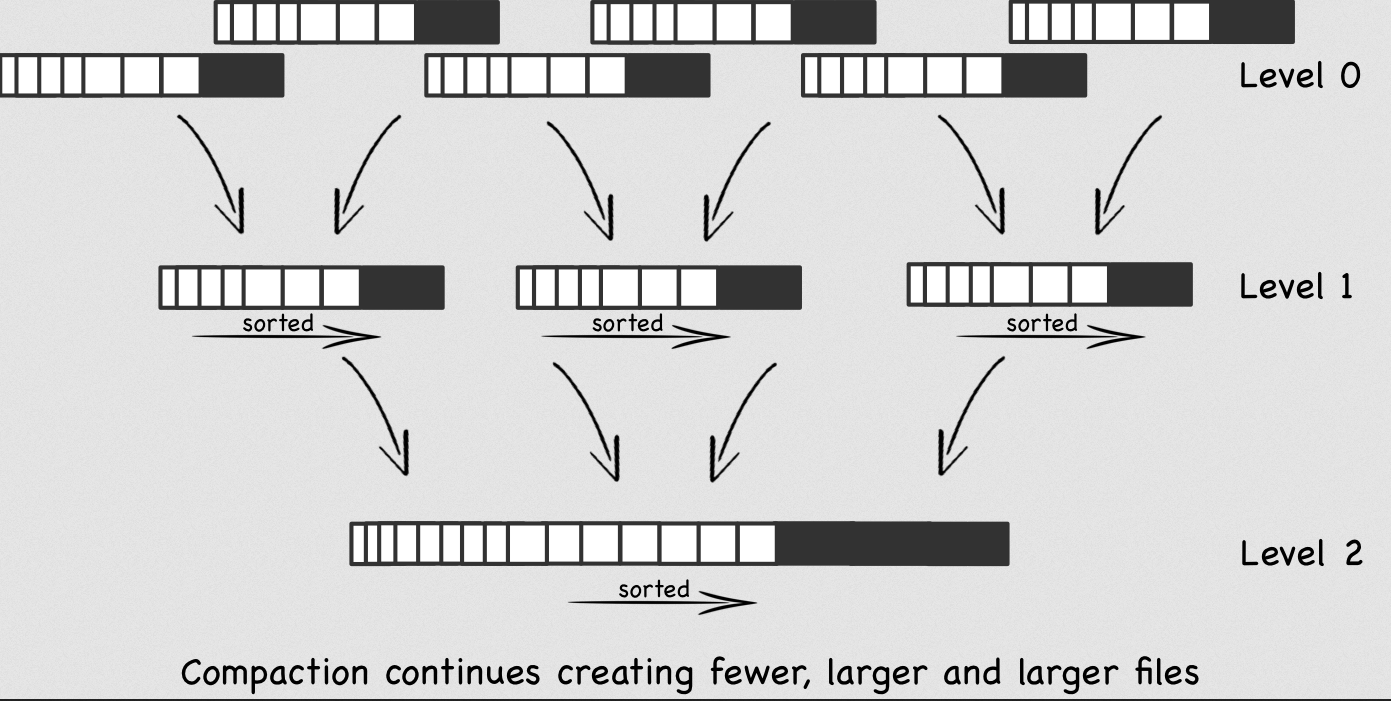

Technical design. Because one of the most common use cases of the new databases is storing data generated by high-throughput sources, it is important that the storage engine can handle write-intensive workloads while still offering acceptable read performance. RocksDB implements what is known in the database literature as a log-structured merge tree, or LSM tree. Going into the details of LSM trees, and of RocksDB’s implementation of them, is out of the scope of this post, but suffice it to say that it is an indexing structure optimized to handle high-volume write workloads, whether sequential or random. This is accomplished by treating every write as an append operation. A mechanism called compaction runs in the background, transparently for the developer, removing data that is no longer relevant, such as deleted keys or older versions of valid keys.

Source: http://www.benstopford.com/2015/02/14/log-structured-merge-trees/

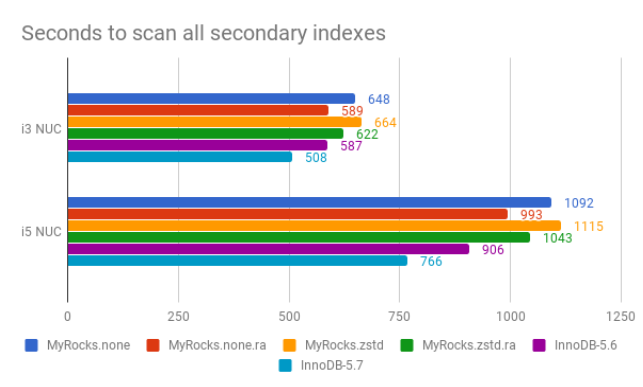
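The append-then-compact idea behind LSM trees can be sketched in a few lines. The toy class below is a deliberately simplified illustration, not RocksDB’s implementation: the names `ToyLSM`, `TOMBSTONE` and `memtable_limit` are hypothetical, everything lives in memory, and compaction merges all segments at once rather than level by level.

```python
from typing import Dict, List, Optional

TOMBSTONE = object()  # marker appended in place of a value when a key is deleted


class ToyLSM:
    """In-memory toy LSM tree: writes are appends, compaction cleans up later."""

    def __init__(self, memtable_limit: int = 2) -> None:
        self.memtable: Dict[str, object] = {}        # newest writes
        self.segments: List[Dict[str, object]] = []  # flushed tables, oldest first
        self.memtable_limit = memtable_limit

    def put(self, key: str, value: object) -> None:
        # A write never touches older segments; it only lands in the memtable.
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self._flush()

    def delete(self, key: str) -> None:
        # A delete is just another write: it records a tombstone.
        self.put(key, TOMBSTONE)

    def _flush(self) -> None:
        # Freeze the memtable as a new key-sorted segment.
        self.segments.append({k: self.memtable[k] for k in sorted(self.memtable)})
        self.memtable = {}

    def get(self, key: str) -> Optional[object]:
        # Search newest data first so the latest version of a key wins.
        for table in [self.memtable, *reversed(self.segments)]:
            if key in table:
                value = table[key]
                return None if value is TOMBSTONE else value
        return None

    def compact(self) -> None:
        # Merge all segments, keeping only the newest version of each key
        # and dropping keys whose newest version is a tombstone.
        merged: Dict[str, object] = {}
        for segment in self.segments:  # oldest first, so newer entries overwrite
            merged.update(segment)
        live = {k: merged[k] for k in sorted(merged) if merged[k] is not TOMBSTONE}
        self.segments = [live] if live else []


if __name__ == "__main__":
    db = ToyLSM(memtable_limit=2)
    db.put("a", 1)
    db.put("b", 2)    # memtable is full, so it is flushed as the first segment
    db.put("a", 10)   # newer version of "a"; the old one stays in segment one
    db.delete("b")    # tombstone for "b"; flushed as the second segment
    print(len(db.segments), db.get("a"), db.get("b"))
    db.compact()
    print(db.segments)
```

Before compaction, both versions of `"a"` and the deleted `"b"` still occupy space in the two segments. After `compact()`, only the latest value of `"a"` remains, which is the cleanup role the text attributes to compaction.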

Through the clever use of Bloom filters, RocksDB also offers great read performance, making it an ideal candidate on which to base distributed databases. The other popular data structure on which to base storage engines is the B-tree. InnoDB, MySQL’s default storage engine, is an example of a storage engine implementing a B-tree derivative known as a B+ tree.

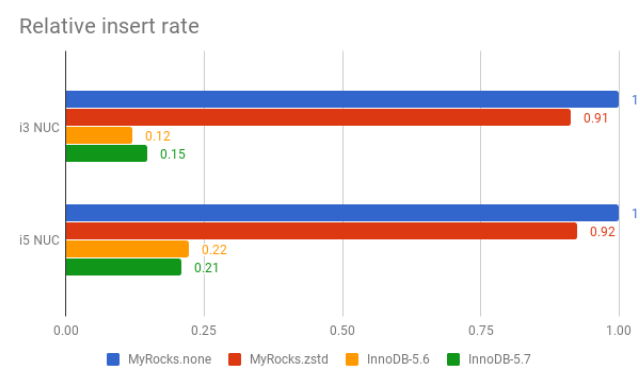
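To show why Bloom filters help reads in an LSM design, here is a minimal sketch of the data structure. It is an illustration only: the class name, the SHA-256-based hashing and the default sizes are assumptions, and RocksDB’s own filter implementation differs.

```python
import hashlib
from typing import Iterator


class BloomFilter:
    """Toy Bloom filter: answers "definitely absent" or "possibly present"."""

    def __init__(self, num_bits: int = 1024, num_hashes: int = 3) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits)  # one byte per bit, for simplicity

    def _positions(self, key: str) -> Iterator[int]:
        # Derive several bit positions per key by salting a single hash function.
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{key}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, key: str) -> None:
        for position in self._positions(key):
            self.bits[position] = 1

    def might_contain(self, key: str) -> bool:
        # False means the key was never added; True may be a false positive.
        return all(self.bits[position] for position in self._positions(key))


if __name__ == "__main__":
    segment_filter = BloomFilter()
    for key in ("user:1", "user:2", "user:3"):
        segment_filter.add(key)
    print(segment_filter.might_contain("user:2"))
    print(segment_filter.might_contain("user:999"))
```

A storage engine can keep one such filter per on-disk file and skip reading any file whose filter reports that the key is definitely absent. The filter never produces false negatives, so skipping is safe; an occasional false positive only costs an unnecessary read.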

Performance. Choosing a technical design for performance reasons needs to be backed by empirical verification. During his time at Facebook, Mark Callaghan performed extensive and rigorous measurements comparing MySQL performance on InnoDB and on RocksDB. He did so in the context of the MyRocks project, a fork of MySQL that replaces InnoDB with RocksDB as MySQL’s storage engine. Details can be found here. Not surprisingly, RocksDB regularly comes out as vastly superior in write-intensive benchmarks. Interestingly, while InnoDB was regularly better than RocksDB in read-intensive benchmarks, that advantage was smaller, in relative terms, than the advantage RocksDB has over InnoDB in write-intensive tasks. Here is an example from an I/O-bound benchmark on an Intel NUC:

Source: https://smalldatum.blogspot.com/2017/11/insert-benchmark-io-bound-intel-nuc.html

Tunability. RocksDB provides several tunable parameters to extract the best performance on different hardware configurations. While the technical design provides an architectural reason to favor one type of solution over another, achieving optimal performance on particular use cases usually requires the flexibility of tuning certain parameters for those use cases. RocksDB provides a long list of parameters that can be used for this purpose. Samsung’s Praveen Krishnamoorthy presented at the 2015 annual meetup an extensive study on how RocksDB can be tuned to accommodate different workloads.

Manageability. In mission-critical solutions such as distributed databases, it is essential to have as much control over, and visibility into, critical components of the system as possible, such as the storage engine in the nodes. Facebook introduced several important improvements to RocksDB that enterprise-grade software products require, such as dynamic option changes and detailed statistics for all aspects of RocksDB’s internal operations, including compaction.

Production references. The world of enterprise software, particularly when it comes to databases, is extremely risk averse. For totally understandable reasons (the risk of monetary losses and reputational damage in case of data loss or data corruption), nobody wants to be a guinea pig in this space. RocksDB was developed by Facebook with the original motivation of switching the storage engine of its massive MySQL cluster, which hosts its user production database, from InnoDB to RocksDB. The migration was completed by 2018, resulting in 50% storage savings for Facebook. Having Facebook lead the development and maintenance of RocksDB for the most critical use cases in its multibillion-dollar business is a very important endorsement, particularly for developers of databases that lack Facebook’s resources to develop and maintain their own storage engines.

Language bindings. RocksDB offers a key-value API, available for C++, C and Java. These are the most widely used programming languages in the distributed database world.

When these six areas are considered holistically, RocksDB is a very appealing choice for a distributed database developer looking for a fast, production-tested storage engine.

Who Uses RocksDB?

Over the years, the list of known uses of RocksDB has increased dramatically. Here is a non-exhaustive list of databases that embed RocksDB that underscores its suitability as a fast storage engine:

While all these database providers probably have similar reasons for picking RocksDB over other options, Instagram’s replacement of Apache Cassandra’s own LSM tree, written in Java, with RocksDB is significant. That replacement is now available to all other users of Apache Cassandra, which is one of the most popular NoSQL databases.

RocksDB has also found wide acceptance as an embedded database outside the distributed database world for equally important, mission-critical use cases:

- Kafka Streams – In the Apache Kafka ecosystem, Kafka Streams is a client library that is commonly used to build applications and microservices that consume and produce messages stored in Kafka clusters. Kafka Streams supports fault-tolerant stateful applications. RocksDB is used by default to store state in such configurations.

- Apache Samza – Apache Samza offers functionality similar to Kafka Streams, and it also uses RocksDB to store state in fault-tolerant configurations.

- Netflix – After looking at several options, Netflix picked RocksDB to support their SSD caching needs in their global caching system, EVCache.

- Santander UK – Cloudera Professional Services built a near-real-time transactional analytics system for Santander UK, backed by Apache Hadoop, that implements a streaming enrichment solution that stores its state on RocksDB. Santander Group is one of Spain’s largest multinational banks. As of this writing, its revenues are close to 50 billion euros with assets under management approaching 1.5 trillion euros.

- Uber – Cherami is Uber’s own durable distributed messaging system, equivalent to Amazon’s SQS. The Cherami team chose RocksDB as the storage engine in its storage hosts for its performance and indexing features.

RocksDB: Powering High-Performance Distributed Data Systems

From its beginnings as a fork of LevelDB, a key-value embedded store developed by Google infrastructure experts Jeff Dean and Sanjay Ghemawat, through the efforts and hard work of the Facebook engineers that transformed it into an enterprise-class solution apt for running mission-critical workloads, RocksDB has been able to gain widespread acceptance as the storage engine of choice for engineers looking for a battle-tested embedded storage engine.


Ethan is a software engineering professional. Based in Silicon Valley, he has worked at numerous industry-leading companies and startups: Hewlett Packard (including their world-renowned research organization, HP Labs), TIBCO Software, Delphix and Cape Analytics. At TIBCO Software he was one of the key contributors to the redesign and implementation of ActiveSpaces, TIBCO’s distributed in-memory data grid. Ethan holds Master’s (2007) and PhD (2012) degrees in Electrical Engineering from Stanford University.